Generative tech is a lot more than LLMs

by Matt Nash, Co-Founder / CTO

A friend and respected colleague of mine is one of the most interesting folks I've ever met. He makes things all the time- ceramics, 3D prints, woodworking projects. Recently he visited to chat business and the state of Iowa's cornfields (same as ever, btw). He let me know he'd brought something with him- by his description, "baubles". I laughed aloud at that characterization. Trinkets, toys, whatever- I hadn't heard anyone use the word "bauble" in common parlance. As he extended his hand theatrically, I could see he really was holding what I would first describe as "baubles"- artfully crafted, colorful, interesting, but ultimately functionless little items to please the eye.

I've seen a lot of cynicism about LLMs, and I think it's understandable. Half my interactions with them are frustrating at best- any additional task complexity is a non-starter. It's not just math, either; simple inquiries like "who's in the movie I'm watching" result in confident hallucinations. When I point out these discrepancies to the model (don't ask me why, I guess I just feel compelled to), I get a perfunctory apology for "the confusion earlier". I know it's just an attention mechanism, but it sure feels like the model's publishers want to shrug off responsibility for their model's output while capitalizing on its explosive adoption. They want to have it both ways, and if I were a cynic without much experience building learning systems, I would be unimpressed with this. It's a bauble: artfully crafted, colorful, interesting, but ultimately (mostly) functionless. I could see why you'd be tempted to extrapolate- if THIS is the best thing that vaunted AI companies can create, and they're ostensibly the best there is out there, then this whole AI boom is just a tourist trap on the way to whatever is actually next, like Big Gus (see below). It runs, but if your boss expected you to get to work on the back 40 with it, you'd have some challenges. It's sure not going to be writing its own code any time soon, even with the incredible genius that is Devin.

NOTE

What most folks don't realize is that these consumer-facing models don't NEED much brilliance to meet the needs of most users, so the companies creating them aren't optimizing them to be complex problem solvers. They don't need to be smarter than you to be worth adopting across your company- they just need to be smarter than the bottom third of your officemates. Take a little survey and plot that bell curve in your mind, and you start to see that complexity or accuracy aren't their strong suits, either. LLM publishers are several generations ahead of what's released to the public, and are focusing on speed and convenience, which is the right play. LLMs are going to keep getting better, and might eventually be the technical miracle every self-described expert (formerly a blockchain expert) has been forecasting. But be warned: if you conclude that GenAI === current-gen LLMs (wrong), and that GenAI as a whole is a passing fad, incapable of anything meaningful, you're missing the point.

Trust me- it really, really is not a passing fad. Your AI strategy needs to encompass a lot more than just "How we're going to deal with staff using LLMs". Yes, I know for many of you, your company still hasn't even fully migrated to "the cloud" and you've faced too many mirages already. I know your mainframe is still the most important stack in the organization. I know that the holy court of your TECHNOLOGY REVIEW BOARD is still formulating their "strategy" to approach containerization, and that your boss's boss doesn't have any idea what they're even asking for when they demand an "AI Strategy". I'm sorry to say, it really doesn't matter- this is their "Miller's planet" moment, and if you're reading this, it's now your job to be the one to point out the impending wave if you want your company to have a chance.

LLMs are a subset of Generative AI technology, which is a subset of what can more broadly be called "AI" if we're comfortable using that term to mean neural networks or other complex learning systems. I hate the term in general- it's been abused rampantly and most of the discourse about it is based on some pedantic purity test where neither party is operating honestly. Expert systems, present in mainframes since their inception, are technically an approximation of human intelligence, and artificial. For our purposes (since of course our version of things is the only right one), we are talking about "advanced learning systems". That doesn't include rules engines, or remembered configurations in a mainframe. This definition also wouldn't include traditional linear or logistic regression models. Whether it includes stuff like AdaBoost or random forests really depends on how spicy we're feeling on a given morning. Those last few techniques are unbelievably under-utilized and are essential stepping stones to build an "AI" competency in your industry, so don't skip them. They're just not what we're talking about today.

Generative AI includes transformers (LLMs), GANs (common for image creation), diffusion models (more image creation), and VAEs (broad applications). I'm leaving out as many as I've mentioned, and the examples are just illustrative to help you contextualize generative technology more broadly. For example, GANs have been used to generate conditional tabular data instead of images. GANs and VAEs have both been used to generate a multitude of novel antibiotic candidates.

A survey of Generative AI for De Novo Drug Design

GenAI in drug discovery: Frontiers

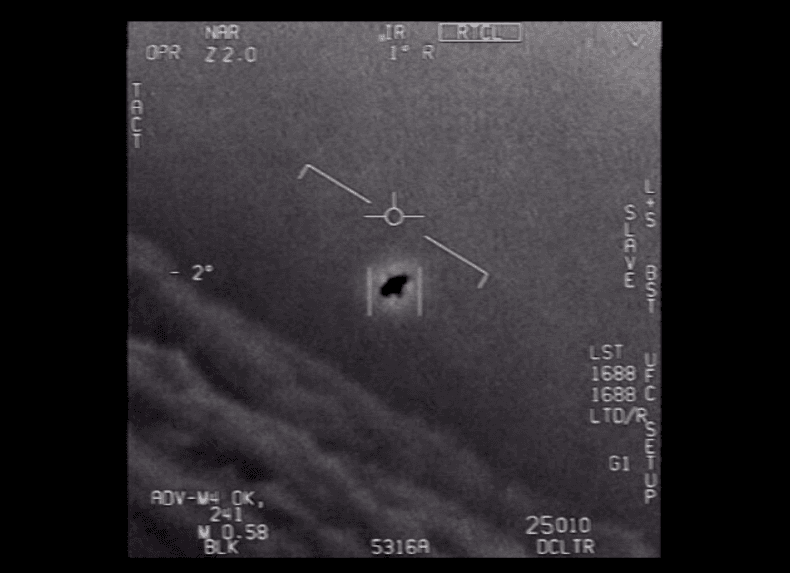

Quants are using GANs to simulate market pricing based on conditional constraint models. An earthquake on the other side of the planet would be a constraint condition for generative (synthetic) stock price models- which existing inference models might switch to temporarily for their efficiency frontier optimization without having to wait to see what's happening with impacted commodities. With generative technology, that future may be postulated every morning under any number of scenarios (if it's UAP disclosure, buy RTX and LMT) and the trading day simulated in living color, as though it were really happening in some alternate universe.
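To make "conditional" concrete, here is a minimal sketch of the interface, not of a GAN itself. A hand-set random walk stands in for the trained generator, and everything named here (`SCENARIOS`, `generate_path`, `simulate_day`, the drift and volatility numbers) is a made-up assumption for illustration. In a real system the mapping from condition to price behavior would be learned from data, not typed in by me.

```python
import math
import random

# Hypothetical scenario conditions mapped to (drift, volatility) per minute.
# A trained conditional generator would learn this mapping; these are invented.
SCENARIOS = {
    "baseline": (0.0, 0.001),
    "earthquake": (-0.0005, 0.004),
}


def generate_path(condition, start_price=100.0, steps=390, seed=None):
    """Sample one synthetic intraday price path for a given condition.

    Stand-in for a conditional generator: a log-normal random walk whose
    parameters depend on the condition label. 390 steps is roughly one
    minute-by-minute US trading session.
    """
    drift, vol = SCENARIOS[condition]
    rng = random.Random(seed)
    prices = [start_price]
    for _ in range(steps):
        log_return = rng.gauss(drift, vol)
        prices.append(prices[-1] * math.exp(log_return))
    return prices


def simulate_day(condition, n_paths=200, seed=0):
    """Generate many alternate versions of the same trading day."""
    rng = random.Random(seed)
    return [
        generate_path(condition, seed=rng.getrandbits(32))
        for _ in range(n_paths)
    ]


def mean_close(paths):
    """Average closing price across simulated paths."""
    return sum(path[-1] for path in paths) / len(paths)


if __name__ == "__main__":
    for name in SCENARIOS:
        print(name, round(mean_close(simulate_day(name)), 2))
```

The point is the shape of the call: hand the generator a condition, get back as many plausible days as you want, and let your downstream models react to them before the real day happens.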

NOTE

Digital customers might be simulated to order cheeseburgers in fast food's next big marketing venture. Manufactured sensor data may be fed to our autonomous combat drones so they've learned the lesson of a million strenuous battles before they leave the factory. Their new response maneuvers might be emergent behaviors diffused from random noise, surprising the enemy with inhuman efficiency. What are your competitors doing? Yes, they may be as paralyzed as your organization- but they might also be composing their domain ontology so they can shape their data. They might be investing heavily in new partnerships to take advantage of what they learned from their ontology.

They might be evolving, not just operationally, but in their own product offerings. Disruption to established industry will be heavy and dense, and your company needs to be positioned to endure that if you have designs to survive the coming decade of change. This might take a few years. Maybe 5, or even 10. During that time cynics and legacy technologists will continue to congratulate themselves for not "buying the hype", but be warned- the wave is coming.